This essay was originally published on December 8, 2022, with the email subject line "CT No. 145: Let the bots reignite your snobbery."

If you’ve been paying attention to all the machine-generated content that’s flooding the internet this year, you might be concerned about the state of digital content industries and wondering if you will have a job in a few years. If this sounds like you, follow this advice:

Never work for someone who would replace you with a robot if they had the chance.

Yes, it’s challenging to follow. If you work for any company with a CFO, chances are high they would replace you with a robot immediately when given the opportunity. But in general, if you have the privilege of being selective about your employer, make sure at least the CEO values your humanity on a fundamental level, and bring that humanity to work.

Because here’s the thing about what’s now being called AIG (AI-generated) content*: The companies who would use machine-generated content for copywriting or illustration were never going to pay you a living wage anyway. They’re happy they no longer have to go on Fiverr and search for the cheapest possible human illustrator or copywriter, someone whose work they’re using to fill in the web templates with content. Business leaders who think AI will solve their problems swear that as long as they have the content in place, the business will do just as well.

They’re wrong, of course. But the problem is that businesses are inundated with bad copy and bad internet content: most of what they read on internet forums is bad copy, and pretty much every other B2B website, white paper and blog post they read has subpar copy.

Bad copy abounds because:

- Most online business writing is bad on every level, from idea to sentence

- because many tech businesses have not valued good writing

- because simply being in the digital marketplace and using ad tech at a basic level has, for a long time, meant that anyone could build a fast-growing business where they didn’t have to care about content quality

Business owners who don’t care about content quality also don’t believe in building brand equity, understanding context or valuing originality; they just want something in place so they can go back to executing on their ad-based business model.

But we’re at the point with technology adoption where simply buying ad impressions doesn’t reap the easy benefits it used to. In the past five years, most businesses and marketers have realized that purchased visibility doesn’t scale without a strong brand, quality content, and stellar digital customer experiences.

Luckily for us, the leader of the tech pack seems to have thrown down some sort of gauntlet.

*I hate this term and much prefer “machine-generated content” or “natural language generation,” which is what we were calling the tech before the most recent hype cycle.

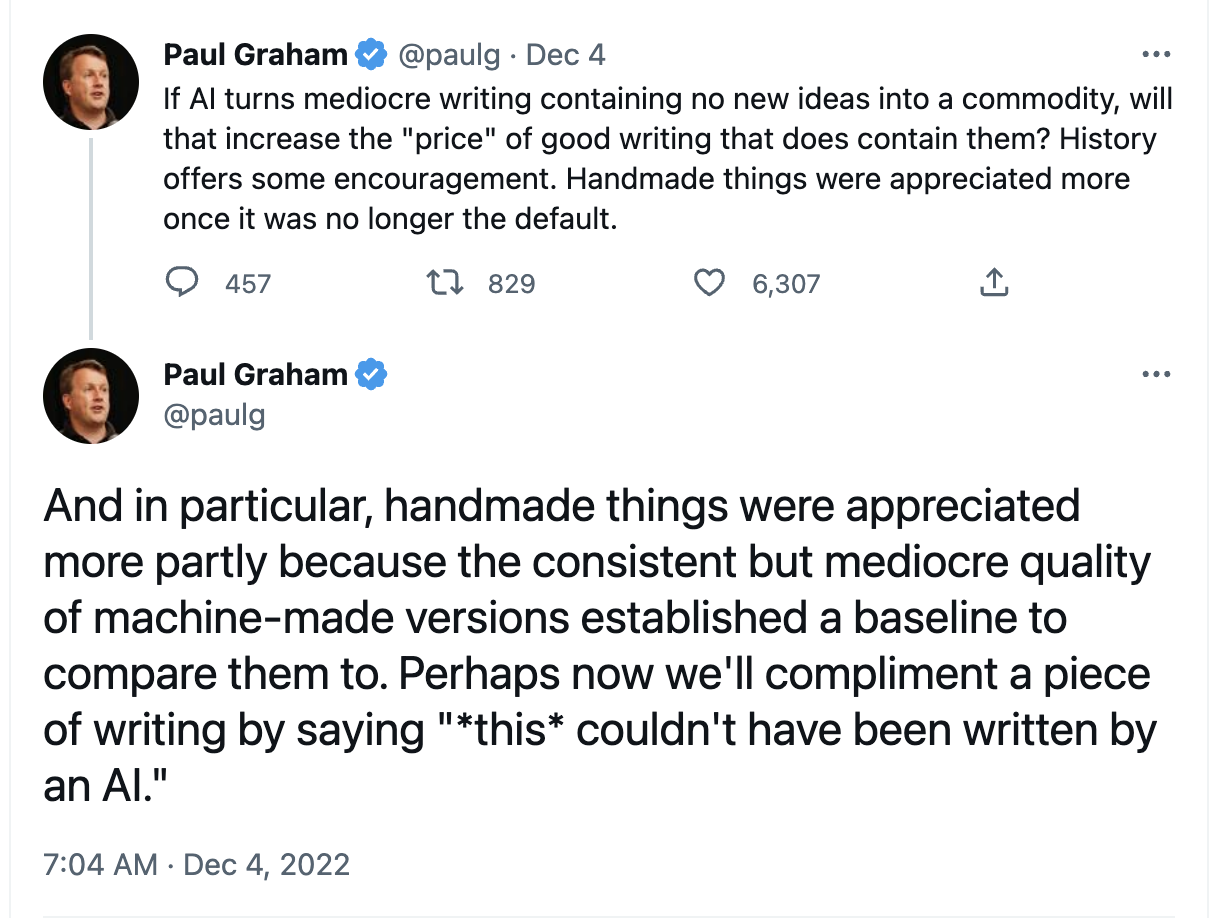

The billionaire said it: Paul Graham recognizes that quality content and craft are important

If you’re unfamiliar, billionaire Paul Graham is a founder of Y Combinator, the tech accelerator responsible for many of your favorite online businesses, as well as the ones you hate. He is a venture capitalist who writes meandering blog posts with marginal value other than that he is a very rich man, and people listen to whatever he says in hopes they, too, will become rich.

And this week, to my shock and horror, I agreed with him.

What-ho, Paul! I’m happy to chat with you about your misperception of the effects of industrialization on artists, but, putting that aside, are you talking about how you value writing craft, the process of shaping meaning through considered language? A word and concept that’s been cast aside by the technology industry for decades in favor of logic, speed and efficiency?

Perhaps now we’ll compliment a piece of writing indeed.

In the above tweet, Graham is writing about ChatGPT, a new natural language generation (NLG) product from OpenAI (his wife is an investor, and he’s cozy with CEO Sam Altman). If you aren’t familiar with ChatGPT, just look it up on literally any online platform with a search function and you’ll see plenty of examples from the past week. If you want a more in-depth explainer, here’s NYT’s Kevin Roose in typically breathless style, embedding tweets instead of conducting original reporting (and quoting zero women, which tends to happen in tech coverage).

ChatGPT responds to open-ended text queries with paragraphs of text-based answers. It can create script-like dialog, write code, and provide somewhat factual answers to questions, as long as they are not about individual people or events after 2021.

ChatGPT is imperfect for many reasons: it spits out wrong answers, useless equivocations, and empty words in the thousands. Even Ben Thompson points out that it can be completely wrong.