Happy May Day! Don't let them tell you a robot can do your job better than you can. Nolite te bastardes carborundorum.

—DC

The front door to discovery: How natural language processing is the key to website visibility in LLMs

A decade ago, my colleague explained how natural language processing (NLP) was supposed to work. "Let's say you search for 'who is Aaron Rodgers,'" he said. "And then your follow-up search would be, 'How tall is he?' and Google would give you the right answer."

"How tall is he?" has been my NLP litmus test since then. While it's the kind of question a child could easily answer, it's a deceptively complex question for a computer, because it requires:

- memory of the immediately preceding search query

- understanding that "he" is in reference to the proper noun "Aaron Rodgers" and not the subject of the sentence, which is "who,"

- the context that English doesn't structure its sentences like other languages. We don't say, as the phrase translates in Spanish, "How does he measure?" (and if an English speaker were to use that specific phrasing, the intent might be for a very different kind of content).

The conversational phrase "how tall is he?" transforms traditional keyword-first search. It contains no nouns, which are the bedrock of pretty much all query processing. (If you've ever worked with me on content optimization, I always encourage more nouns and fewer pronouns in headlines and copy, which orients both algorithms and busy, distracted people.) It's asking for a specific fact about a known entity without clues as to who that entity is.

Google proper still doesn't process a follow-up query like "how tall is he?" but LLMs do. For a student of search and language processing, the reading of the query provided is multiples more interesting than the generated output. The fact that any chatbot can understand "how tall is he?" and output a correct answer is technically fascinating.

Information architecture is optimizing content for natural language processing

A key concept in digital business—and know that I feel completely out of my depth referencing sports twice in one essay— is "Don't skate to where the puck is. Skate to where it's going to be." Don't plan for the present, especially in enterprise content strategy, where the targets aren't usually fast to move. Plan instead for two to five years out. Since Google pretty much owns the natural language processing and machine learning space, plan for where Google wants to be.

When the tech giants released their initial voice-powered search products, the writing was on the wall: Big tech want us to be able to talk with computers, factually and in-depth, about whatever subject we want. Whether or not people actually used voice search (they didn't unless "Alexa, play Taylor Swift!" counts) was irrelevant.

There's a great scene in Steven Soderbergh's Kimi (2022) where the protagonist Angela does her job: she's a machine learning trainer, troubleshooting for spoken phrases that the Siri-like assistant Kimi couldn't process. Essentially she's training the model for natural language processing.

So, for years now, instead of optimizing for exact-match keywords, I've worked with clients on optimizing for key concepts. The process follows this thinking:

- Who is going to talk about this subject, and what will they most want to talk about? Search queries from keyword planner, query reports in Google Search Console, features like People Also Ask, and user interviews provide clues. (Otherwise marketing and sales stakeholders would insist that we only discuss the features and benefits of the products directly, not questions that real-world users might have.)

- How does each subject connect with others? What are the best ways, given the options we have, to build that connection?

- Do we have the content to connect the dots between subjects? If we were reading page-by-page, could we answer all our questions from reading the website through its natural information architecture? Did we need to build pathways or additional content?

- If someone were trying to find an answer without using the exact phrase or concept that maps to each page, would they still be able to find the answer, even if they landed on another page?

It's how I build information architecture for discovery, informed by SEO research, but certainly not beholden to it. It's more informed by editorial approaches, which empathize with a reader while also understanding the most common things people want to know. Most of the core concepts and important nouns are woven in the website's on-page navigation, rather than in-line links. They're built to avoid duplicate content, while still pointing users to the correct answers of their most common queries.

An information architecture with key concepts baked into navigation also tells people what a website does. It makes the content scannable without forcing users to scan a page of marketing copy, just as navigation should. A good menu is a website's table of contents.

Coincidentally (or not at all), using key concepts in a website's navigation helps immensely with search visibility... and, as it turns out, visibility within LLMs.

GEO, or generative engine optimization, is a wild goose chase

Until very recently, many SEO experts and optimization guidelines have focused on page-level optimizations rather than high impact signals like navigation. They've actively avoided changing navigation because it's not a "proven" ranking factor. Or because changing URLs is risky (but not impossible) for SEO. Or I don't know why, but they just don't touch the navigation or structure of the website.

But in skating to where the puck is going to be, because natural language processing has always been the endgame, it makes more sense to build a semantically accurate and navigable website based on editorial and user experience guidelines. Because people like to see a table of contents to orient themselves, leading to a better user experience... and because people create the technology that reads the websites, using well-established principles of research and design.

When I use LLMs, I'm not especially concerned with the quality of the outputs (I have learned to ignore that the writing is not very good). I am extremely concerned, however, with how each website is read and reflected by the machines.

Currently, SEO experts on LinkedIn are peddling "generative engine optimization" (GEO) and tactics like optimizing vector embeddings (keyword stuffing, but with math). They claim that focusing on reverse engineering content through looking at vectors and cosine similarity, rather than structuring good content up front will help clients get mentioned in LLM-based search results more often.

But the thing is... LLMs are already reading more like humans than like computers. How do I know this?

Well, for one, we have much more clarity on how machine learning works in 2025 than we did in 2013. Machine learning engineers are not hard to find, and most will happily explain how natural language processing works.

But, more importantly, the machines will tell us, without hallucination, exactly how they are reading our content. Because they are using a much older technology called language (you may have heard of it) to understand what they see on the page. Yes, there is all kinds of math in the process, but essentially they are reading the text of a website as humans read it.

Deep research models will tell you exactly how they read websites

This week I experimented with Gemini Deep Research, and for the first time in more than a year, I've been absolutely delighted in what I found in an LLM. Not because the research output was good (it was not). Not because the LLM delivered something to me quicker than I could write it myself (technically, yes, but I would never write it that way).

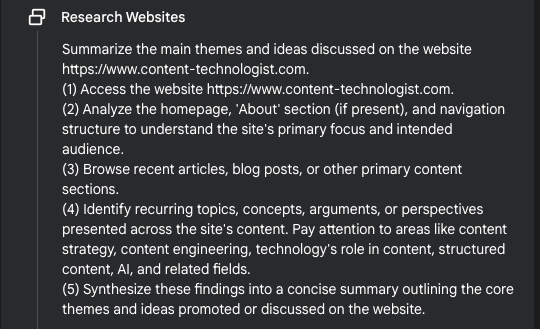

But because, when I request that it summarize the output of The Content Technologist, the LLM told me how it was programmed:

Check out those steps!

(2) Analyze the homepage, 'About' section (if present), and navigation structure to understand the site's primary focus and intended audience.

(3) Browse recent articles, blog posts, or other primary content sections.

(4) Identify recurring topics, concepts, arguments, or perspectives presented across the site's content.

Guess what, the LLM is looking at the words on the homepage, about page, and navigation structure as it reads the website.

We don't need to spend time mathematically proving how the machine processes content because it is literally telling us how it processes content. And before you say, "oh, the machines hallucinate," this text is a specific feature of Gemini Deep Research that acts more like a prompt. It's clearly pre-programmed in. Yes, LLMs hallucinate way too much, but this description of how the LLM searches, reads, and processes text is embedded in the model.

LLMs know a lot about machine learning because they are trained by machine learning engineers. It's likely all the base texts for machine learning are a part of the model. They can tell you more about the specifics of their inner workings than most journalists can.

For example, I asked ChatGPT "Who is Naz Reid?" and followed up with "How tall is he?" — and it answered correctly. When I asked how it knew what I was talking about in the follow-up, it outlined in great detail concepts like "coreference resolution" and "self-attention models," which are not hallucinated and easily corroborated from other texts.

When I asked it to explain those concepts in plain language, it said, "Every word is a little student," which is probably the best sentence I've ever seen an LLM write. (It's probably someone else's concept, aggregated, but that's an entirely different issue.) The words, even when abstracted in a math equation behind the scenes, are the core components, not the mathematical similarity of the outputs.

Language is the technology

When you study literature, communications, and journalism, you learn that you have to accept the text for what it is on the page. You have to use the quote you received from the sources. You can only read what's there, not what's not there. Yes, there are subtext and implied themes, but you discover those "scientifically" by reading the words that are there and saying, "these words mean this thing and here is how I know."

And one of the things you learn as a person working in the world is: When someone shows you who they are, believe them. If someone says they are doing something, you should take them at their word unless proven otherwise. (Like, if they publish their entire fascist plan on the internet, take them at their word that they will execute said fascist plan! But I digress...)

In this case, I take the program at its word. It was designed that way. No one working at Google is trying to trick us or lead us off the trail by claiming that the words in a website's navigation are important when they are not. Google's goal is to help us control the machines with our words, not our vector embeddings. The goal is to make the math invisible, which we can't do if we're engaging in the wild goose chase called "relevance engineering."

To put it another way: optimizing with GEO reverse engineering tactics is like entering a house through a small attic window. GEO ignores that the research frameworks literally embedded in the outputs of the model are the keys to the front door.

To reach more people, to build an audience, to speak to both people and algorithms, we need to rely on language as our technology first and foremost. We need to anticipate that people will want to know the heights of sports stars and celebrities. We need to anticipate the questions people will ask about a specific topic and give them insights about those they didn't think to ask.

It's the foundation of any editorial process.

Designing and optimizing editorially, by focusing on the connections between language first and quantification next-to-last, is skating to where the puck has been going for a long time. Information architecture and a readable website are the assist for the winning goal.

Content tech links of the week

- Content strategist John Collins on how content teams need to be thinking about other considerations besides creation, at least some of the time

- Do you think about URL structure a lot? I sure do! Here's Michael Soriano on UX considerations in URL design.

- The internet used to be fun is essentially a literature review of the changing nature of the internet (found in this week's Dense Discovery, a top-tier newsletter)

The Content Technologist is a newsletter and consultancy based in Minneapolis, working with clients and collaborators around the world. The entire newsletter is written and edited by Deborah Carver, independent content strategy consultant, speaker, and educator.

Affiliate referrals: Ghost publishing system | Bonsai contract/invoicing | The Sample newsletter exchange referral | Writer AI Writing Assistant

Cultural recommendations / personal social: Spotify | Instagram | Letterboxd | PI.FYI

Did you read? is the assorted content at the very bottom of the email. Cultural recommendations, off-kilter thoughts, and quotes from foundational works of media theory we first read in college—all fair game for this section.

It's hard to find joy these days, but I've gotten much joy from watching the Minnesota Timberwolves trounce the Los Angeles Lakers in the NBA playoffs. The whole team is brilliant, and after last night I've a newfound appreciation for Rudy Gobert.