Welcome, new subscribers! You've tuned in just in time to ground your data and, in a couple of weeks, to celebrate this newsletter's anniversary.

Some housekeeping notes:

- No newsletter next week, as it will be American Independence Day. I don't care much about the holiday itself, but I do care about relaxing with friends and eating grilled foods.

- It's your last chance for a preorder discount on Your Content Is Your Marketing, our new course about optimizing your website and newsletter content to be read by search engines, AI agents and chatbots, and other tools that use natural language processing to understand content. Get it before it launches on Monday!

After completing the course and its exercises, you'll have more control over how your website content appears in organic search results and AI summaries, configured to attract audiences actively looking to find your content. You'll also be able to optimize your website archives to attract subscribers with some low-lift—I promise—structured content.

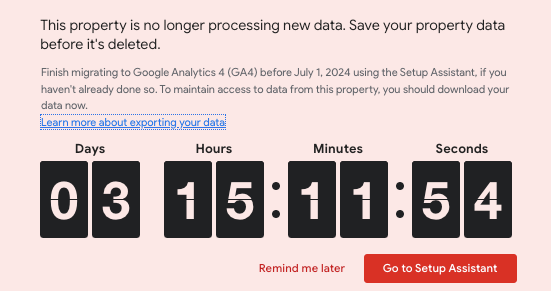

And after I put the finishing touches on these videos and materials, I'll transform into a semi-well-rested person once again.

- Google's Universal Analytics, aka old GA, will be dissolved from existence as of Monday.

Most companies transitioned to GA4 a while ago, but if you'd like to preserve your historical data, I recommend exporting the following reports:

- Acquisition > All Traffic > Channels

- Acquisition > All Traffic > Referrals

- Behavior > Site Content > All pages

- Behavior > Site Content > Landing pages

The above reports can tell you, roughly, how audiences used to find your website and what pages were the most popular. Aim to export the two years of data before your company configured GA4, or if your account has many years of data remaining, set the date range all the way back to when you first started collecting analytics. Even if you just keep the CSV files in a backlog for reference, your past years of analytics data can show how much your digital content has grown and shifted.

- Finally! I spoke at the We Are Content Strategy and Design Meetup in Melbourne last night, and it was a blast! Thank you for having me, Melbourne. Check out the recording here.

If newsletters are the new blogs, Meco is the new catchall reader app.

Try Meco for free

Seeing is believing: Content performance attribution with your own, real, believable digital content metrics

Everyone else's numbers are inflated, but your own data is your gold. Learn to trust your owned data and make decisions with verifiable performance metrics.

If you work in the parallel frenemy hype bubbles of media and tech industries, it's quite possible that you believe "all the data is fake." Pageview data? Inflated! Clicks? They don't match up to the sessions! Rank? Smells pretty inaccurate to me! Impressions? The worst metric ever invented!

I'm not here to tell you all digital and media measurement data is real, because much of it is not. Most metrics that measure algorithmic visibility—impressions, clicks, rank, reach—are "calculated" by tech platforms for you, which means they are estimated and extrapolated based on how content is called by a retrieval system and not how many people actually looked at the content.

One might say the phenomenon is big tech overexaggerating its performance again, but as with most things tech vs. media, it's more of a both/and situation.

A brief history of a non-metric: CPM as the self-regulated storm

Impressions are, by and large, a media industry problem. In the 1990s, when newspapers, magazines, and 24-hour cable news were all raking in ad dollars, the metric of impressions was chosen to compare all media on an equal playing field. Impressions described the estimated number of times audience members could have seen ad placements, whether they were actively reading the newspaper page-by-page or just flipping through and glancing.

I have to refresh my personal knowledge of media history and look at a few books, since what's currently on the internet is rather basic, but from my understanding* the metric of CPM — cost per mille, or cost per 1,000 impressions — was introduced long before digital advertising. The metric was designed to help media buyers value their purchases across newspapers, magazines, radio, television, and outdoor (billboard) media. Each publication, channel, or venue would measure approximately how many people could have seen each impression based on subscriber bases, distribution estimations, and Nielsen's emerging system of broadcast measurement.

That way, a brand could measure approximately how many people maybe saw the same campaign on a billboard, in a TV commercial, and in a magazine, then compare relevant sales to the campaign and say, "I guess it worked!" or "I guess it didn't!"

In the days when television options were limited, national broadcasters could reach massive audiences. Famously, the M*A*S*H* series finale aired in 1983 to an audience of 121.6 million, approximately 52% of the U.S. population at the time, making it the most popular TV event since the 1969 moon landing. (For comparison, 2024's Super Bowl barely surpassed the M*A*S*H* numbers in absolutes, and approximately 35% of the U.S. population tuned in.)

When banner ads were created in the mid-1990s, the Internet Advertising Bureau also adopted the impression as its standard, so it could compare

1.) the internet was too niche, so not many people were using it

2.) unlike broadcast and print measurement, which could only estimate impressions based on third-party association-level audits, counting digital ads was much easier. After all, computers are calculators! If there's one thing a computer can do, it's count (unless it's an LLM).

Because so few people were using the internet and so few websites were carrying banner advertising (now known as display advertising), early internet CPMs were extremely low, and publishers running ads didn't make much money.

Most online display advertising (banner ads) is still sold on a CPM basis. The programmatic ad units weighing down every website are sold on CPM.

The disadvantage of CPM is that, as opposed to the early days of the internet, in 2024 it's quite easy to attract fake traffic and juice up CPMs. An impression just means that an ad has loaded, not that it's been seen or registered by a person.

Magazines and newspapers are rumored to have similarly artificially inflated their impression numbers. A classic magazine impression estimation tactic is to say, "2,000 local dentist offices subscribe to our publication, and we estimate that each office has 40 people looking through the magazine each day, and there are 22 days a month, so each ad receives 1.76 million impressions." It's a metric that cannot be validated, no matter which medium it's used to measure.
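The dentist-office arithmetic above can be written out as a quick sketch. The subscriber, reader, and day counts come straight from the example; the $20 CPM is a made-up rate I'm assuming purely for illustration, not a figure from any real rate card.

```python
def estimated_impressions(subscribers: int, readers_per_day: int, days: int) -> int:
    """Multiply out a 'could have seen it' estimate. Nothing here is verified."""
    return subscribers * readers_per_day * days


def campaign_cost(impressions: int, cpm: float) -> float:
    """CPM is the price per 1,000 impressions."""
    return impressions / 1000 * cpm


# Figures from the magazine example above.
impressions = estimated_impressions(subscribers=2_000, readers_per_day=40, days=22)
print(f"{impressions:,} estimated impressions")  # 1,760,000 estimated impressions

# Hypothetical rate, assumed only to show how the estimate turns into a bill.
HYPOTHETICAL_CPM = 20.00
cost = campaign_cost(impressions, HYPOTHETICAL_CPM)
print(f"${cost:,.2f} at a ${HYPOTHETICAL_CPM:.2f} CPM")
```

Every input to the estimate is a guess, so the bill is built on guesses too.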

*I learned most of the above on the job (h/t to my former coworker Christine Campbell, whose presentation on the M*A*S*H* finale I've never forgotten), from books in grad school, from Matt Locke's How to Measure Ghosts, or through digital ephemera, and when I write a dissertation on the history of advertising, I swear I'll fact-check it. So.... take me as an informed grain of salt and feel free to correct if I've gotten it wrong. I will write a correction!

Solving the Wanamaker problem: Direct response and cost-per-click

Impressions are not the only way to sell advertising. Before the internet, direct mail marketing developed and fostered direct response. Direct response, or DR, campaign success could be judged on the number of people who replied to a mailer with an order or request for information. Instead of estimating the number of people who may have seen an ad, advertisers could see the responses of interested potential customers.

When Google started selling search-based advertising in the early 2000s, they knew that, compared with TV and newspapers, the sad number of impressions from banner ads on the internet wouldn't help much. So they sold ads based on direct response: in the pay-per-click/cost-per-click/cost-per-conversion (PPC/CPC) system, advertisers were not charged based on how many times the ad was seen, but instead how many times a user clicked the ad. Compared with banner ads, which very few people ever click on, CPC demonstrated reduced spend and direct revenue growth.
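To make the billing difference concrete, here's a minimal sketch of the two models side by side. Every number in it is hypothetical and chosen only to show the mechanics; real rates vary enormously by industry and placement.

```python
def cpm_cost(impressions: int, cpm: float) -> float:
    """Impression-based billing: pay for every 1,000 ad loads, clicked or not."""
    return impressions / 1000 * cpm


def cpc_cost(clicks: int, cpc: float) -> float:
    """Click-based billing: pay only when someone responds to the ad."""
    return clicks * cpc


# Hypothetical campaign: the ad loads 100,000 times and 150 people click.
impressions = 100_000
clicks = 150

print(f"CPM bill: ${cpm_cost(impressions, cpm=5.00):,.2f}")
print(f"CPC bill: ${cpc_cost(clicks, cpc=1.00):,.2f}")
```

Under CPM the advertiser pays for the 99,850 loads nobody clicked; under CPC the bill only reflects the responses.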

When I started working in digital marketing, Google's PPC was doing gangbusters. Even though I never worked in paid search, the campaigns in the early 2010s were cheap and effective for advertisers. Audiences used the internet to shop, and they started shopping with a search on Google. Google's direct response ads demonstrated immense value for businesses that had only ever previously purchased impression-based media.

Google acquired the analytics firm Urchin (famous for the Urchin Tracking Module or UTM code), which enabled the search engine to tie ad performance to ecommerce sales. And thus the era of attribution began: Ads were sold on Google's search engine, websites were optimized for organic search, Google Analytics loosely identified where the conversions originated, and suddenly advertisers had more marketing performance data than they'd ever dreamed of.

John Wanamaker famously said, "Half the money I spend on advertising is wasted; the trouble is I don't know which half." But in the early 2010s, Google had basically convinced digital advertisers they had solved that problem by demonstrating, directly, how each dollar originated online.

Navigating the analytics and attribution morass

Unfortunately, print and broadcast media weren't able to prove such immediate results, and they clung to the CPM model. There's a bit of coastal animosity as well: The East Coast advertising industry didn't like the disruption of Silicon Valley's new model. After all, they had bigger audiences and the advantage in bigger impression numbers.

Which brings us to where we are now: An absolute analytics clusterfuck, where the numbers never seem to add up.

Impressions only count how many times ads are served, not how many times they are viewed. Pageviews don't accurately track engagement from high-value audiences. Cost-per-conversion doesn't track brand awareness, a major contributing factor in business performance.

Marketing attribution is seen as the end-all-be-all of tracking, but firms fail to realize that even when attribution is properly set up with the best of UTM tracking, channel groupings that come through analytics are a rough estimate at best. Many companies also forget to benchmark performance when paid advertising is not in market, meaning that they're never tracking how well their website asset on its own supports the bottom line.

Advertising and marketing agencies operate in their own best interest, which is to highlight the metrics that make them look best. Businesses don't know who to believe when they receive third-party digital reporting. And when agencies are trafficking spend of hundreds of thousands of dollars on programmatic display, no one bothers to acknowledge the role of organic content in supporting both CPC and CPM ad placements.

If you're a media buyer, you know how to distribute a client's spend among a CPM and PPC mix, but for most of us, it's overcomplicated and inaccurate.

But! Today we are going to make it less so! Because today we are going to ground your analytics in a source of truth.

Learning to trust your owned data: Developing your business' grounded source of truth

Here's where I go on the record to say: I don't trust Google as a company, for the simple fact that they are a giant corporation and a monopoly. But!

In a decade of looking at digital client analytics across more than 40 industries and myriad measurement and tracking programs, I've found that Google Analytics provides the most accurate representation of digital performance and attribution when used correctly. How do I know this?

Because I was responsible for monthly and quarterly results for clients for years, and I watched the dollars come in the door. I saw the quality subscribers roll across the transoms. And I know how to measure cause and effect. Also, I've had really amazing training from incredibly talented media buyers and measurement strategists.

Because more than advertising tracking platforms, more than competitive analysis tools, more than email open rates, more than rank tracking and social media engagement tracking and whatever other invented metric someone's come up with, with Google Analytics, I can tie the end data to a source of truth.

That said! Any analytics program that enables your business to track website conversions, or meaningful audience behaviors, tied to real data that you can see—trust that analytics program!

Because yes, all the numbers are sketchy. The impression numbers are inflated and the clicks and sessions may or may not be from robots.

Except for the numbers that you can count yourself. Those numbers are mostly trustworthy. More trustworthy than third-party sources, anyway.

So! Here's how you can start trusting your data.

Step one: Pick a source of truth.

Your source of truth is data that you own, see, and verify. Two verifiable data sources are more impactful than any others:

- Revenue, usually from product, subscription, or ticket sales

- Email address collection, where you can determine the amount and quality of email addresses as they are registered in your system

It's critical to be able to verify the source of incoming information outside of GA4 or your chosen analytics platform. You need to be able to see that yes, these dollars or this information is actually coming in the door in the third-party source, matching (or nearly matching) what Google says.

With current privacy regulations, not every single visitor is going to be trackable. That's ok, as long as your analytics numbers represent a significant portion of your conversions. Currently, when comparing with clients, we've determined that GA4 ecommerce tracking picks up around 95% of website transactions, even from the most privacy-focused users.*

*Warning! I have only conducted revenue comparisons with U.S. clients. Although the data seems stable for some European clients, do check your owned data with GA4 to see whether Google is still accurately tracking revenue or email address collection compared with your own records.
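Checking your analytics against your own records is simple division. Here's a minimal sketch: the transaction counts are hypothetical, and the 90% threshold is an assumption of mine, not an official tolerance from Google or anyone else.

```python
def capture_rate(tracked: float, owned: float) -> float:
    """Share of your own recorded total that the analytics platform also saw."""
    if owned <= 0:
        raise ValueError("owned total must be positive")
    return tracked / owned


# Hypothetical monthly totals: your store's records vs. what GA4 reported.
owned_transactions = 400
ga4_transactions = 380

rate = capture_rate(ga4_transactions, owned_transactions)
print(f"GA4 captured {rate:.0%} of recorded transactions")

# Assumed tolerance; pick whatever gap you're comfortable explaining.
MIN_ACCEPTABLE = 0.90
if rate < MIN_ACCEPTABLE:
    print("Tracking gap is larger than expected; check your event setup.")
```

Run the same comparison for email sign-ups if that's your source of truth.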

Step two: Track that source of truth as a key event in Google Analytics 4 or your other chosen analytics platform.

Having a source-of-truth metric only works if you are tracking it. Make sure that you are actually tracking the events on your website that match to conversions with GA4. Because it's not enough to just track them in your owned data; you need to tie these human actions to digital metrics.

Usually it takes under an hour to configure purchase tracking and newsletter sign-ups. I'm not going to give you explicit instructions here cos it's getting long, but check out these links:

Step three: Take your "before" benchmark.

Because purchase and key event tracking does not activate retroactively, it's important to get a sense of a "control group" before your experiment. Ideally, you'll track a baseline metric of purchases or emails collected for at least a week before running any advertisements or making any website changes.

Most digital experiments or data read-outs lack a control group, making them absolutely meaningless as data science. But taking a "before" benchmark creates your digital performance baseline.

If you are already tracking purchase or email data, track the average monthly performance of all the below metrics across an entire year. If not, try to go at least a month between adding event tracking and implementing performance changes.

Create a benchmark for the following GA4 metrics, or their counterparts in your platform:

- Users

- New users

- Sessions

- Engaged sessions

- Engagement rate

- Average engagement time

- Average revenue per user (if you are tracking revenue)

- Your key events (sign-ups or purchase revenue)

Pull the data in all the above metrics for the following reports in your desired time period:

- Engagement > Page / screen

- Engagement > Landing page

- Acquisition > User acquisition — primary channel grouping

These three reports will help you create a rough benchmark for where your traffic and conversions are coming from. Questions to ask when taking a benchmark:

- Which channels drive the most absolute traffic?

- Which pages/channels drive the most key events or purchases?

- Which have the highest engagement rate?

- Which landing pages are the most popular and have the highest engagement?
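If you keep your exports as CSV files, the benchmark can be pulled together with a few lines of Python. This is a rough sketch, not a GA4 integration: the column names and the sample rows are hypothetical, so rename them to match whatever your platform actually exports.

```python
import csv
import io
from collections import defaultdict
from statistics import mean

# Hypothetical export: one row per month per channel.
EXPORT = """month,channel,sessions,engaged_sessions,key_events
2024-01,Organic Search,1000,600,30
2024-01,Email,400,320,40
2024-02,Organic Search,1200,660,36
2024-02,Email,500,400,45
"""


def benchmark(csv_text: str) -> dict:
    """Average monthly sessions, engagement rate, and key events per channel."""
    rows_by_channel = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        rows_by_channel[row["channel"]].append(row)

    result = {}
    for channel, rows in rows_by_channel.items():
        sessions = [int(r["sessions"]) for r in rows]
        engaged = [int(r["engaged_sessions"]) for r in rows]
        key_events = [int(r["key_events"]) for r in rows]
        result[channel] = {
            "avg_sessions": mean(sessions),
            # Engagement rate = engaged sessions divided by total sessions.
            "engagement_rate": sum(engaged) / sum(sessions),
            "avg_key_events": mean(key_events),
        }
    return result


baseline = benchmark(EXPORT)

# Rank channels by average monthly key events, highest first.
ranked = sorted(baseline.items(), key=lambda kv: kv[1]["avg_key_events"], reverse=True)
for channel, stats in ranked:
    print(channel, stats)
```

Save the output somewhere you'll find it again; it's the "before" you'll compare against later.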

Step four: Make your changes.

Now! Let's do something. Run an ad campaign! Send a press release! Publish a bunch of content updates! Shift your call to action! Do your experiment!

For some experiments on large websites, you may only want to experiment on a small portion of pages rather than making giant global site changes. Work with your teams and analysts as they watch results as well.

Watch your source of truth as the campaign runs or as your changes take effect. Watch as key events rise and fall based on what you're spending or what channels you're using. Look at how content changes have affected the key event rates of specific pages. But don't jump to any conclusions.

And look at your other metrics, the ones that are not key events. Does a rise in engagement rate correlate with your changes or campaigns? As long as your verifiable source-of-truth human events are accurately reporting their data, the correlating changes in other metrics are believable as well.

When you add subheads to a page, does engagement time get longer? Do more people become subscribers?

Or, if you make changes that have positively impacted Google Search visibility, are you seeing a correlated change in those source-of-truth key events?
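One way to put a number on "does this correlate" is a Pearson correlation coefficient between a source-of-truth metric and another metric over the same weeks. The sketch below uses made-up weekly values, and it only tells you whether two series move together, not whether one caused the other.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of the same length (2+ points)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return cov / sqrt(var_x * var_y)


# Hypothetical weekly values, for illustration only.
engagement_rate = [0.52, 0.55, 0.54, 0.60, 0.63, 0.66]
newsletter_signups = [40, 44, 41, 52, 55, 61]

r = pearson(engagement_rate, newsletter_signups)
print(f"r = {r:.2f}")  # close to 1 means the two series rise and fall together
```

With only a handful of weeks, treat any coefficient as a hint to keep watching rather than a conclusion.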

If you're implementing content changes (especially minor updates) without paid media support, it may be a couple of months before you see an impact on organic search. Changes from content take much longer to become evident than the immediacy of ad spend.

Step five: Measure your effects.

After several months, pull all the exact same benchmarks as before, and read your data like a pro. Look at what has changed in the before-and-after. Do changes in channel performance match the efforts or spend you put into that channel?

Over time, compare changes in your sources of truth to other metrics. Does a 5% increase in newsletter subscribers correlate with shifts in traffic from organic search? Do you see more activity or higher quality data/more revenue from your source-of-truth metrics when you are actively posting on social media than when you are not? Does your organic content still attract quality visitors when the paid spend is turned off?
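The before-and-after read can be as plain as a percent change per metric. A minimal sketch, with hypothetical monthly averages standing in for your own benchmark numbers:

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from the benchmark, as a percentage."""
    if before == 0:
        raise ValueError("benchmark value is zero; percent change is undefined")
    return (after - before) * 100 / before


# Hypothetical monthly averages: the benchmark vs. the months after your changes.
before = {"sessions": 10_000, "engaged_sessions": 5_500, "newsletter_signups": 200}
after = {"sessions": 11_000, "engaged_sessions": 6_600, "newsletter_signups": 210}

for metric in before:
    change = percent_change(before[metric], after[metric])
    print(f"{metric}: {change:+.1f}%")
```

If sign-ups move 5% while sessions move 10%, that gap is the interesting part: more traffic arrived, but not all of it was the kind that subscribes.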

You may not know exactly how different channels work together just yet. It takes a while to understand how your edits and updates affect performance down the line. But as you track against a verifiable data source, you'll begin to trust the digital data you see and understand how it affects the bottom line.

And you'll never judge performance based on impressions again!

But first!

How is your content performing so far this year?

Reserve your July analytics collab

Content tech links of the week

- Is it you, me, or the interface? I love this piece from Alexander Obenauer about how we take our interface design for granted.

- As reported here from Consequence of Sound, MTVNews destroyed its archive, and it's deeply upsetting. If you weren't familiar, MTVNews.com was an excellent website back in the glory days of the experimental web, and I know a lot of writers whose work was lost completely with Paramount's cost-cutting decision. Keep websites alive!

- Do we need language to think? The New York Times shattered my worldview (but boosted my understanding of knowledge graphs!) with this research that indicates language and thought are processed in totally separate parts of the brain.

- Inside sci-fi author Ursula K. Le Guin's website, from the always lovely Dirt.

- Content strategist Jane Ruffino argues that Nobody wants to use any software, and I'm not going to quibble.

- A new-to-me concept: The progressive disclosure of complexity in user experience explained by strategist Jason Lengstorf.

- The Verge explains in great complexity Netflix's challenge of encoding video so that it looks good. I would love to see more explainers from The Verge and other tech outlets.

- You know it's bad when Digiday is skeptical of digital ads: Everyone at Cannes is realizing how wasteful programmatic ads are.

- And finally, we're included in a very nice list of women covering Indie Publishing, created by Journalism.co.uk. I'm particularly chuffed since I am not from the U.K., and it's such good company to be in. Cheers! And add all of us to your list of media crit reads!

The Content Technologist is written by Deborah Carver, an independent consultant based in Minneapolis. It is edited by Arikia Millikan.


Affiliate referrals: Ghost publishing system | Bonsai contract/invoicing | The Sample newsletter exchange referral | Writer AI Writing Assistant

Cultural recommendations / personal social: Spotify | Instagram | Letterboxd

Did you read? is the assorted content at the very bottom of the email. Cultural recommendations, off-kilter thoughts, and quotes from foundational works of media theory we first read in college—all fair game for this section.

I am late for a party today, so I really hope my read through edits were thorough and deeply apologize for any typos or missed sentences. I'm sure Lorde and Charli XCX can relate.